Testing JavaScript Applications

March 09, 2019

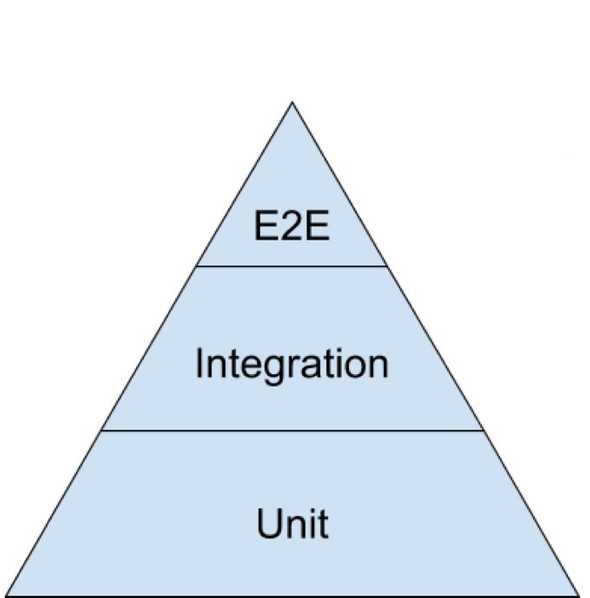

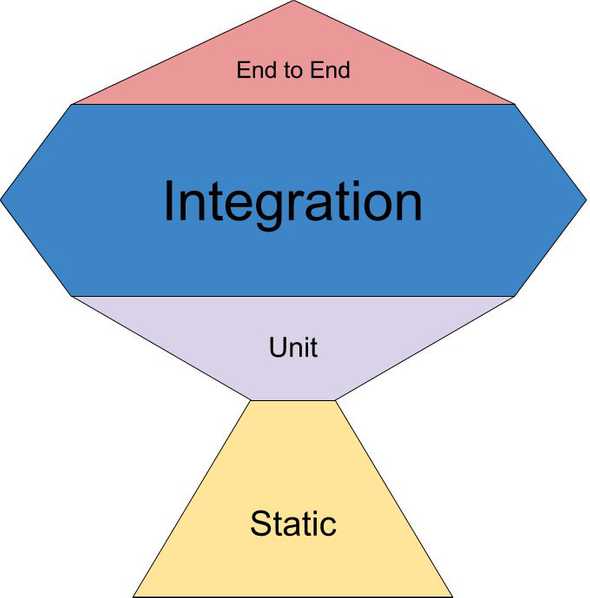

In 2018, I doubled down on my testing game. Going into the year, I was primarily writing unit tests, with Jasmine as my go-to test runner. In backend tests I was using Sinon for spies, stubs and mocks; in frontend tests I was using Sinon in addition to Enzyme for testing React components. I was also using an ESLint editor plugin with a rigorous set of rules. While this was all—in theory—“good” (the 2018 Frontend Tooling Survey showed that 75% of respondents were using a linter, but only 54% were writing tests), it ultimately ranked about halfway up both the canonical testing pyramid and Kent C. Dodds’ revamped testing trophy:

Code was being tested, but not necessarily well tested. Integration tests were minimal; end-to-end tests were missing. Code was being linted as it was written, but not on commit or deployment. Code coverage wasn’t being reviewed. I tended to have more confidence in backend tests than in frontend ones, due in part to following some common Enzyme testing practices: shallow rendering, manipulating component instances (props and state) and using implementation details to make assertions (DOM elements, class names, component display names, etc.). Ultimately, too many of my test suites were potential meme fodder:

Below is an overview of my evolved approach to testing JavaScript applications. Also take a look at the demo app on GitHub, which puts it into practice.

nota bene: Testing is an immense topic; what follows is intended to be a modest contribution. It’s written standing on the shoulders of giants (see references below). It contains opinions and suggestions; testing lacks hard and fast rules. The JavaScript ecosystem also marches on; tools come and go, and testing is no exception.

Motivation

There are many reasons to write tests, but—for me—the biggest ones are:

- Quality

  Code must work. Tests help ensure that good programming practices are followed and project requirements are met.

- Maintainability

  Code changes over time. Tests will save you effort over the life of a project.

Together, the combination of quality and maintainability enforced by a self-testing codebase provides considerable confidence when adding a feature, updating dependencies, refactoring, debugging, deploying and more.

Types of Tests

Not all tests are created equal. The type of test used in a given case is typically a tradeoff between cost (i.e., how much effort the tests take to configure, write and maintain) and speed (i.e., how long they take to run).

Static

Cost: 💵

Speed: 🏎🏎🏎🏎

Not tests per se, static analysis includes linting and type checking. Linters check code for problematic patterns or syntax that doesn’t adhere to certain style guidelines. Type checkers add syntax for specifying data types, which are then used to verify correct usage throughout the codebase.

Unit

Cost: 💵💵

Speed: 🏎🏎🏎

Unit tests are small in scope, validating an isolated functionality (e.g., a controller method). All dependencies are mocked, which makes unit tests capable of being run in parallel, thereby increasing their speed. Unit tests are useful for covering things like branching logic and error handling in one piece of an application.

Integration

Cost: 💵💵💵

Speed: 🏎🏎

Integration tests are medium in scope, validating a subset of functionalities (e.g., a view UI or an API endpoint). Fewer dependencies are mocked than in unit tests; the amount of mocking dictates whether an integration test suite can be run in parallel or must be run serially. Integration tests are useful for covering the interaction between adjacent pieces of an application.

End-to-end

Cost: 💵💵💵💵

Speed: 🏎

End-to-end tests are large in scope, validating a superset of functionalities (e.g., simulating a pattern of user behavior in the browser). No dependencies are mocked, which requires end-to-end tests to be run serially against a running server, thereby decreasing their speed. End-to-end tests are useful for covering an application holistically.

Testing Tools

There is an abundance of testing tools in the JavaScript ecosystem these days. The ones mentioned below have provided me with robust solutions for each type of test.

Static

ESLint is the preeminent JavaScript linting utility; pluggability is its biggest advantage. Prettier is an excellent companion for formatting. eslint-config-airbnb and eslint-config-prettier are two popular configs, both of which I’ve rolled into my own config. lint-staged is also a handy tool for adding linting and format checking to a pre-commit hook.

TypeScript and Flow are the most popular JavaScript type checkers, with TypeScript really taking off in the back half of 2018.

nota bene: At the time of writing this post, adding type checking to a JavaScript application entails a bit more complexity than a linter (read more about some of the tradeoffs here); for simplicity, I didn’t include it in the demo app. I have used Flow in a few applications, but haven’t worked meaningfully with TypeScript. That being said, the January - June 2019 TypeScript Roadmap and projects like deno are all very exciting; I plan on doing more with TypeScript this year.

Unit & Integration

Jest is a batteries-included test runner, and it’s replaced Jasmine in my testing toolbox. Some of its best features include an easily extensible configuration, watch mode, powerful mocking capabilities and built-in Istanbul code coverage reporting. It’s also complemented by a number of awesome packages and resources, jest-dom being one of my favorites for frontend tests.

react-testing-library, which is a React-specific wrapper around dom-testing-library, has really changed how I write frontend tests. It provides utilities that facilitate querying the DOM in the same way a user would, thereby encouraging both accessibility and good testing practices.

End-to-end

Cypress is an exceptional browser testing tool. It’s simple to install. Writing, reading and debugging tests is easy. Its documentation is best-in-class. Tests run reliably in both a Chromium-based GUI (with time travel) and a headless CLI. cypress-testing-library is a good companion, adding dom-testing-library’s methods as custom commands.

Guiding Principles

When performing any systematic process, it’s instructive to have a set of ideas that inform your actions throughout. Here are my guiding principles for writing tests:

Writing tests isn’t optional; it’s a prerequisite for professional software. Quality is more important than quantity. Integration tests often give you the most bang for your buck.

The code you’re testing has a contract; validate what it should do, not how it does that thing.

“The more your tests resemble the way your software is used, the more confidence they can give you.”

Testing is about building confidence in your codebase; maximize this confidence by making actions and assertions that approximate a consumer of your code.

Mental Models

I find mental models useful for framing tasks. Here are a few that I routinely apply when writing tests:

Atoms, Molecules & Organisms

Atomic theory posits that matter is made up of indivisible particles called atoms. A collection of atoms forms a molecule. A collection of molecules forms an organism.

Unit test atoms. Integration test molecules. End-to-end test organisms.

Use Cases

A use case outlines an interaction between a user and an application. Each use case typically has a happy path and one or more edge cases.

Cover the happy path in end-to-end tests. Cover likely edge cases in integration tests. Cover unlikely edge cases in unit tests.

Critical Control Points

A critical control point is a step in a process where the consequences of a failure could be catastrophic.

Thoroughly test critical control points.

Diminishing Returns

The law of diminishing returns states that, after a certain point, each additional unit of input yields a smaller increase in output.

Write tests for the sake of added confidence, not added coverage.

Writing Tests

My approach to writing tests for an application depends on the portion being tested, which in turn directs the type of test that’s used.

nota bene: This section references the demo app. Scripts can be found in the package.json file. Organizing and naming tests is largely a matter of preference. For organizational purposes, I’ve co-located everything related to testing in a top-level test directory. For nomenclature purposes, I’ve included the type of test in each test’s file name (i.e., *.unit.spec.js, *.integration.spec.js and *.e2e.spec.js).

Static

I consider tests to be a part of the codebase. Therefore, all source and test code is statically analyzed. I like to develop with ESLint and Prettier editor plugins, which give me instant feedback and auto-formatting on save. Additionally, lint, format:check and format:fix scripts ensure consistency after development.

Unit & Integration

Unit and integration tests that can be run in parallel make up the majority of my tests. They can be run with the test script, which runs each test once, or the test:watch script, which reruns the tests related to changed files each time a change is made. Additionally, a coverage script can be run to check that my tests are thorough. How I go about writing these tests differs between backend and frontend code.

Backend

For backend code, I primarily write unit tests. My goal in these tests is to validate discrete input/output relationships resulting from conditional logic, typically in controller and helper methods.

For example:

- A unit test for a view controller (source) covering all likely user authentication/authorization scenarios.

- A unit test for an API endpoint controller (source) covering all likely user authentication/authorization scenarios and an unlikely model error scenario.

- A unit test for a password helper (source) covering all likely validation scenarios.

I find describe blocks useful for organizing logical branches, and the arrange/act/assert pattern useful for structuring individual test blocks.

nota bene: Both controller unit tests utilize helpers to reduce repetition across similar tests, which can be found in the controller-test-helpers directory.

Frontend

For frontend code, I primarily write integration tests. My goal in these tests is to validate DOM output resulting from a combination of likely API response and user interaction scenarios.

Take the Manage Users view (source) as an example, which renders a list of users in addition to providing a means of creating, updating and deleting users. To test the UI for this view, I start with an integration test that mounts the <Root/> component via a custom renderer exposing react-testing-library’s utilities as well as some additional helpers for common actions and assertions. The test cases, which are written in the same way a manual tester would perform them, cover the following scenarios:

- Initial list of users
  - With successful fetch
    - Returning user data
    - Returning no user data
  - With unsuccessful fetch (500 Internal Server Error)

- Creating a user
  - Resulting in success
  - Resulting in error (409 Conflict)

- Updating a user
  - Resulting in success
  - Resulting in error (422 Unprocessable Entity)

- Deleting a user
  - Resulting in success
  - Resulting in error (404 Not Found)

Collectively, these scenarios exercise the majority of the UI’s functionality, in addition to simulating all of the errors that the API can return. Integration testing the entire component tree in this way not only covers a lot of code, it also gives me more confidence that everything is working together properly than testing each component in isolation would. That being said, it doesn’t cover everything, so I augment the integration test with a few unit tests covering:

- Validation on the create user form (unit test)

- Validation and optional password on the update user form (unit test)

- Admin users cannot be deleted (unit test)

End-to-end

While end-to-end tests are the slowest type of test because they must be run serially against a running server, they also provide the highest level of confidence. End-to-end tests cover the entire application, including gaps left by other types of tests, such as the interactions between controllers and models, which are mocked out in unit tests, and the interactions between UIs and APIs, which are mocked out in integration tests. Additionally, because end-to-end tests run in a browser environment, they’re a good place to cover routers, which are tightly coupled to HTTP requests.

End-to-end tests can be run in headless mode with the test:e2e command or in interactive mode with the test:e2e:interactive command. Organizationally, I like to structure them as follows:

- Authentication: tests covering the entire login and logout flows as well as access to views requiring user authentication.

- Authorization: tests covering access to views requiring user authorization.

- Shared: tests covering elements that are present on all of the views (e.g., the navbar and footer).

- Views: tests covering the happy paths for each view.

Structuring end-to-end tests in this way is inspired by this talk by Brian Mann (the creator of Cypress), which covers organization as well as some other best practices for testing with Cypress.

Test Suites

Tests can only provide confidence if they’re run regularly. Therefore, it’s a good practice to build test execution into your workflows.

nota bene: This section also references scripts from the demo app.

Precommit

The precommit script is run at the end of the development workflow (i.e., upon running git commit). It lints and format checks staged files in addition to running the unit and integration tests.

Validate

The validate script is run at the beginning of the build workflow (i.e., as part of a CI pipeline). It lints and format checks the entire codebase in addition to running the unit, integration and headless end-to-end tests.

References

The following people have helped shape my thinking about software testing.

Kent Beck

Martin Fowler

- Blog

- This five-part video series, which is an ongoing conversation about testing with Kent Beck and David Heinemeier Hansson

David Heinemeier Hansson

Mattias Petter Johansson

Kent C. Dodds

Feedback

Have questions, comments or suggestions? Reach out to me on Twitter (@colinrbrooks).

— Colin